Last week we learnt how to create CloudEvents programmatically by using one of the many SDKs available. This week we will extend the CloudEvent created last week and we will send it to SAP Integration Suite, advanced event mesh.

Before we get to the challenge, we might need to talk a bit about what an Event-Driven architecture is and why it is so important. Let’s get started.

Links to March’s developer challenge:

- Week 1: https://community.sap.com/t5/application-development-discussions/march-developer-challenge-cloudeven…

- Week 2: https://community.sap.com/t5/application-development-discussions/march-developer-challenge-cloudeven…

- Week 3: https://community.sap.com/t5/application-development-discussions/march-developer-challenge-cloudevents-week-3/td-p/13642145

Event-Driven Architectures

Long gone are the days when a system (aka target system) would constantly poll another system to check for changes, e.g. a new customer created in a master data system. Traditionally, the target system would learn of such changes only through a programmed routine that polled a service exposed by the source system every X minutes/hours/days. The expectation nowadays is that systems are integrated and that any data created or changed in the source system is immediately available in the target system(s). Enter Event-Driven architectures.

An Event-Driven Architecture is a software architecture paradigm concerning the production and consumption of events. An event can be defined as a significant change in the state of an object within a system[1], for example when a customer/supplier/employee (business object) is created/updated/deleted (action) in a system. Translating this to the SAP world: when a Business Partner is created/changed in SAP S/4HANA (source system), SAP S/4HANA can notify that there was a change in a business object, and the target system(s) interested in the Business Partner object can then react (do something about it) if they need to, for example by creating/updating/deleting the object in their own systems.

How do source and target systems communicate?

Now, if the source system lets other systems know of any changes happening in its business objects, it is not sustainable to create a new programming routine within the source system every time we want to notify a new target system. Traditionally we would have some form of middleware, e.g. SAP Cloud Integration, configure our source system, an SAP S/4HANA system, to send notifications of these events to the middleware, and then use the middleware to distribute the messages, adding target system(s) as needed. That only moves the problem from the source system to the middleware. Ideally, there would be a way for the source system to notify others without anyone having to change it. Enter the event broker.

An event broker is message-oriented middleware that enables the transmission of events between different components of a system, acting as a mediator between publishers and subscribers. It is the cornerstone of event-driven architecture, and all event-driven applications use some form of event broker to send and receive information[2].

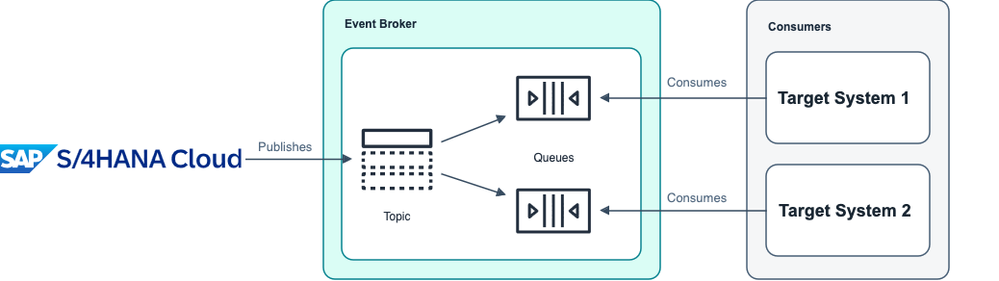

By introducing an event broker in our landscapes, we can configure our source systems to publish their events to this message-oriented middleware. The source system specifies the class of the message (aka topic). Systems interested in the changes to a particular business object in the source system can then subscribe to the event(s), via the event broker, by specifying the topic they are interested in. Two keywords are important here: publish and subscribe (PubSub). This is a well-known messaging pattern used to decouple systems/applications and allow communication between them.

What is the PubSub messaging pattern?

Publish-subscribe is a communication pattern that is defined by the decoupling of applications, where applications publish messages to an intermediary broker rather than communicating directly with consumers (as in point-to-point)[3]. Publishers and consumers do not need to know each other; they simply publish (produce) or consume (receive) events. By following this messaging pattern, we move from the traditional polling mechanism for detecting changes in the source system to reacting to real-time events (notifications) the moment something happens there.
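To make the decoupling concrete, here is a minimal in-memory sketch of the pattern in Python. The `InMemoryBroker` class, the topic names and the event contents are all made up for illustration; a real event broker adds networking, persistence, authentication and much more.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str, dict], None]


class InMemoryBroker:
    """Toy broker: routes each published event to the handlers subscribed to its topic."""

    def __init__(self) -> None:
        self._subscriptions: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscriptions[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber of the topic; return how many received it."""
        handlers = self._subscriptions.get(topic, [])
        for handler in handlers:
            handler(topic, event)
        return len(handlers)


broker = InMemoryBroker()
received: List[dict] = []

# The subscriber registers interest in a topic; it never references the publisher.
broker.subscribe("businesspartner/created", lambda topic, event: received.append(event))

# The publisher only knows the broker and the topic name.
delivered = broker.publish("businesspartner/created", {"id": "BP-1001"})
ignored = broker.publish("businesspartner/deleted", {"id": "BP-1002"})
```

Note that the publisher and the subscriber share nothing except the broker and a topic name, which is what lets us add or remove target systems without touching the source system.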

We mentioned before how target systems can subscribe to events by specifying a topic they are interested in. Some event brokers also allow subscribers to use wildcards (*) in a subscription, so that a single subscription can receive messages from several topics. For example, let’s assume we have an SAP S/4HANA system with the name S4D that publishes the Business Partner create and change events on the following topics: sap/S4HANAOD/S4D/ce/sap/s4/beh/businesspartner/v1/BusinessPartner/Created/v1 and sap/S4HANAOD/S4D/ce/sap/s4/beh/businesspartner/v1/BusinessPartner/Changed/v1. A subscriber system could subscribe to both topics using a wildcard, e.g. sap/S4HANAOD/S4D/ce/sap/s4/beh/businesspartner/v1/BusinessPartner/*/v1, and receive the messages for both event types.
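As a rough illustration of how such a wildcard subscription behaves, the Python sketch below treats `*` as a stand-in for exactly one topic level. This is a simplification I am assuming for the example; real brokers have richer wildcard rules, and the `topic_matches` helper is hypothetical, not part of any SDK.

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if `topic` matches `subscription`, where `*` stands for exactly one level."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    if len(sub_levels) != len(topic_levels):
        return False
    return all(s == "*" or s == t for s, t in zip(sub_levels, topic_levels))


PREFIX = "sap/S4HANAOD/S4D/ce/sap/s4/beh/businesspartner/v1/BusinessPartner"
subscription = f"{PREFIX}/*/v1"

created = topic_matches(subscription, f"{PREFIX}/Created/v1")
changed = topic_matches(subscription, f"{PREFIX}/Changed/v1")
other_version = topic_matches(subscription, f"{PREFIX}/Created/v2")
```

Here `created` and `changed` are both `True`, while `other_version` is `False` because the last level of the topic is fixed to `v1` in the subscription.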

SAP offers different services that can act as event brokers. We will discuss this further in the SAP’s event-driven portfolio section.

With many systems in our landscapes, each one developed by different vendors/teams, it would be good if there was a standard way of structuring these events to simplify how systems create/handle/process these messages, right? This is why we first learnt about CloudEvents 😃.

Now, we can use the CloudEvents message format to exchange messages between systems/services/applications. In our case, we will publish a CloudEvent to a topic in one of SAP’s event-driven portfolio products: SAP Integration Suite, advanced event mesh.

SAP Integration Suite, advanced event mesh

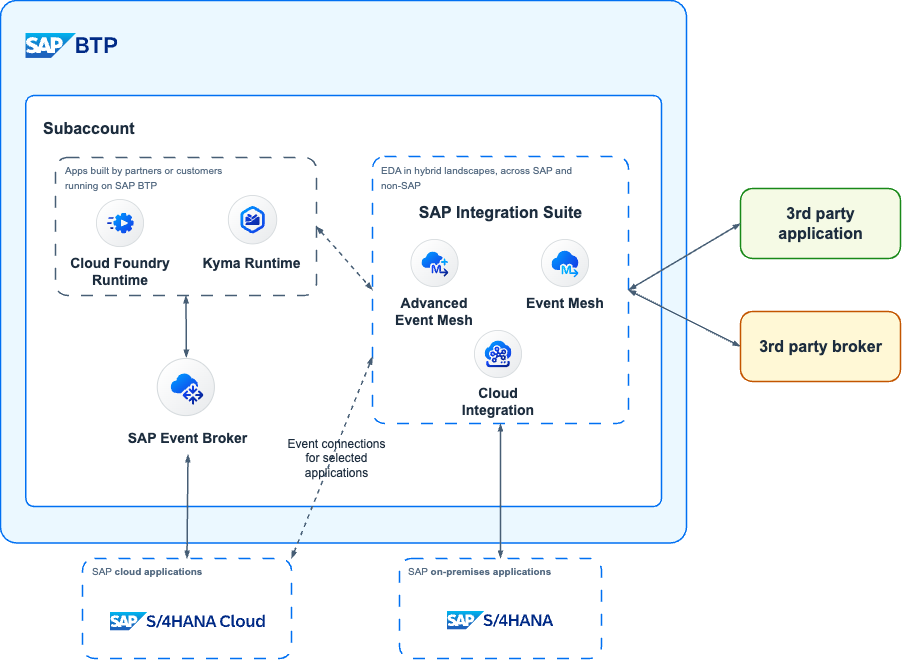

SAP offers various services that can help customers embrace event-driven architectures. The different offerings can meet customers where they are in their EDA adoption/implementation journey. These are:

- SAP Event Broker for SAP cloud applications

- SAP Event Mesh

- SAP Integration Suite, advanced event mesh

Now, for this week’s challenge we will focus on communicating with SAP Integration Suite, advanced event mesh (AEM), which is a complete event streaming, event management, and monitoring platform that incorporates best practices, expertise, and technology for event-driven architecture (EDA) on a single platform. With AEM you can deploy event broker services, create event meshes, and optimize and monitor your event-driven system.

📢To learn more about SAP’s event-driven portfolio check out this blog post: CloudEvents at SAP 🌁 https://community.sap.com/t5/application-development-blog-posts/cloudevents-at-sap/ba-p/13620137.

Connecting with SAP Integration Suite, advanced event mesh (AEM)

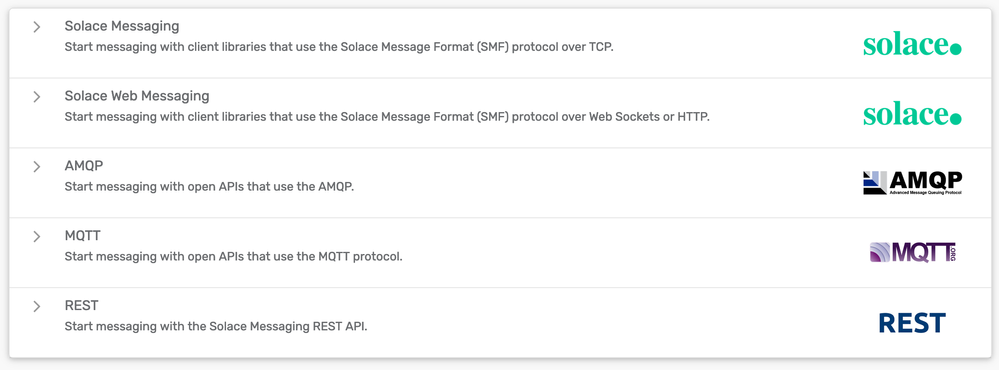

We can use different protocols to connect with AEM. Which one we choose will depend on our needs and the type of service/device that will be publishing/consuming messages. The event broker provides a foundation for multi-protocol and standards eventing including Solace Message Format (SMF), JMS 1.1, MQTT 3.1.1, REST, and AMQP 1.0[1].

Also, there are many connectivity options available depending on your favourite programming language.

Check out the tutorials available for the different programming languages – https://tutorials.solace.dev/.

To keep things simple for this developer challenge, we are going to use the REST Messaging Protocol[3] to send messages (publish) to the event broker by using HTTP POST requests. This uses standard HTTP, which we are all familiar with, meaning that you can send messages with any REST client, e.g. Bruno.

In our case, we are interested in producing messages so we will act as a REST producer and send messages to a topic in the event broker. A REST message sent from a publisher to an event broker consists of a POST request, HTTP headers, and an HTTP message body. The event broker uses the POST request to determine where to route the message, and the HTTP headers specify message properties that may determine how the message is handled. The body of the POST request is included as the message payload.

When an event broker successfully receives a REST message, it sends back an acknowledgement (“ack”) in the form of a POST response. An ack consists of a response code (typically 200 OK) and HTTP headers. Because the response code is sufficient to acknowledge receipt of the original message, the returned message has an empty message body.

We can also use the REST Messaging APIs to consume messages but this requires some additional configuration in the event broker, e.g. setting up a REST Delivery Point, aka Webhook.

We can send messages to a topic or a queue. Below are some examples of how we can send a message to a topic or a queue[2]:

- Sending a message to a topic “a” (both commands are equivalent):

    curl -X POST -d "Hello World Topic" http://<host:port>/a --header "Content-Type: text/plain"

    curl -X POST -d "Hello World Topic" http://<host:port>/TOPIC/a --header "Content-Type: text/plain"

- Sending a message to a queue “Q/test”:

    curl -X POST -d "Hello World Queue" http://<host:port>/QUEUE/Q/test --header "Content-Type: text/plain"
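If you prefer code over curl, the same requests can be sketched in Python with nothing but the standard library. The `build_publish_request` helper and the `http://localhost:9000` address are placeholders of mine; the example only builds the requests so that it can run without a broker.

```python
import urllib.request


def build_publish_request(base_url: str, destination: str, body: str,
                          to_queue: bool = False) -> urllib.request.Request:
    """Build (but do not send) a REST publish request for a topic or a queue."""
    prefix = "QUEUE" if to_queue else "TOPIC"
    url = f"{base_url.rstrip('/')}/{prefix}/{destination.lstrip('/')}"
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


# "http://localhost:9000" is a placeholder for http://<host:port>.
topic_request = build_publish_request("http://localhost:9000", "a", "Hello World Topic")
queue_request = build_publish_request(
    "http://localhost:9000", "Q/test", "Hello World Queue", to_queue=True
)

# Sending would look like this; a 200 response with an empty body is the broker's ack:
# with urllib.request.urlopen(topic_request) as response:
#     acknowledged = response.status == 200
```

The only difference between publishing to a topic and to a queue is the `TOPIC` or `QUEUE` segment in the URL path.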

You’ll notice that in the examples above the body of our message is plain text. When sending a CloudEvent, the Content-Type would be ‘application/cloudevents+json’.

🔍Interested in knowing what sending a CloudEvent to a topic looks like using curl?

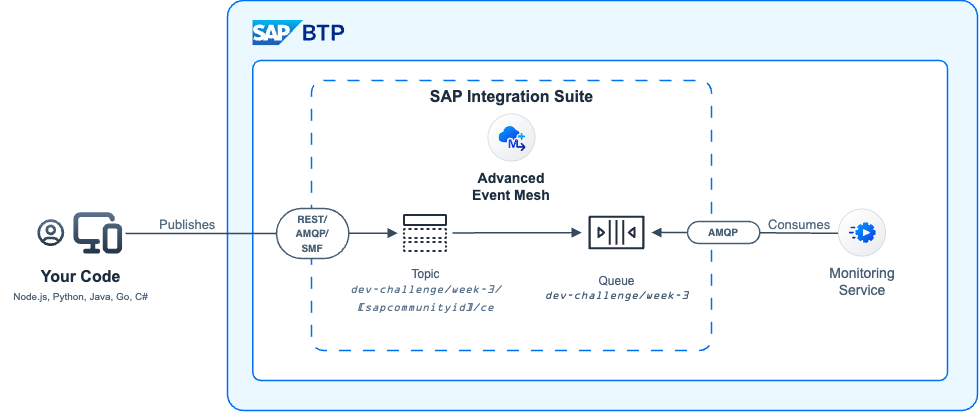

As part of this week’s challenge we will POST our CloudEvent to a topic. In our case, the topic will be dev-challenge/week-3/{{sapcommunityid}}/ce and this is how we include it in the POST request URL: https://{{host}}:{{port}}/TOPIC/dev-challenge/week-3/{{sapcommunityid}}/ce
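Here is a hedged Python sketch of what building that POST request could look like. I am assuming that the broker accepts HTTP Basic authentication; the `build_cloudevent_request` helper, the host, the community ID and the credentials are all placeholders, and the request is only built, not sent.

```python
import base64
import json
import urllib.request


def build_cloudevent_request(base_url: str, community_id: str, event: dict,
                             username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a POST that publishes `event` to the challenge topic."""
    url = f"{base_url.rstrip('/')}/TOPIC/dev-challenge/week-3/{community_id}/ce"
    credentials = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={
            "Content-Type": "application/cloudevents+json",
            # Assumption: the broker accepts HTTP Basic authentication.
            "Authorization": f"Basic {credentials}",
        },
        method="POST",
    )


request = build_cloudevent_request(
    "https://broker.example.com:9443",   # placeholder host and port
    "my-community-id",                   # placeholder SAP Community ID
    {"specversion": "1.0", "id": "1", "source": "/example",
     "type": "com.example.Test.v1", "sapcommunityid": "my-community-id"},
    "example-user", "example-password",  # placeholder credentials
)

# To actually publish: urllib.request.urlopen(request)
```

Keeping the request construction in its own function makes it easy to inspect the URL and headers before anything goes over the wire.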

Week 3 challenge – Send CloudEvent to SAP Integration Suite, advanced event mesh

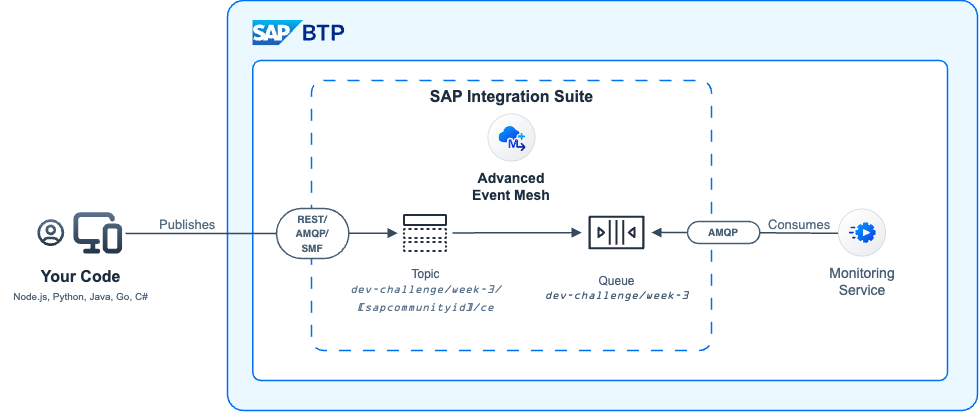

👉 Your task for this week is: Extend the CloudEvent you created for last week’s challenge and add an extension context attribute (sapcommunityid). You will need to specify your sapcommunityid, e.g. ajmaradiaga in my case, as its value. Please ensure that you specify the sapcommunityid in the message; otherwise the message will not count as valid. Note: The diagram above captures the data flow of this week’s challenge.
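If your CloudEvent is a plain dictionary, adding the extension context attribute could be as simple as the Python sketch below. The `with_community_id` helper is hypothetical and the event is only illustrative; if you built your event with one of the SDKs, check how that SDK exposes extension attributes.

```python
import json


def with_community_id(event: dict, community_id: str) -> dict:
    """Return a copy of `event` with the `sapcommunityid` extension attribute set."""
    extended = dict(event)  # shallow copy so the original event is left untouched
    extended["sapcommunityid"] = community_id
    return extended


# Illustrative event; in practice this is the CloudEvent built for week 2.
week2_event = {
    "specversion": "1.0",
    "id": "204f70c0-1301-4812-9705-8db7ab57065e",
    "source": "https://tms-prod.ajmaradiaga.com/tickets",
    "type": "com.ajmaradiaga.tms.Ticket.Created.v1",
    "data": {"id": "IT00010232"},
}

week3_event = with_community_id(week2_event, "ajmaradiaga")
payload = json.dumps(week3_event, indent=2)
```

The extension attribute sits at the top level of the event, next to `specversion` and `type`, and not inside `data`.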

Once you’ve extended the original message, send the message to SAP Integration Suite, advanced event mesh by publishing it to the topic dev-challenge/week-3/[my-sap-community-id]/ce. In the comments, share a snippet of your code where the connectivity is taking place and post a screenshot of the successful run of your code. Use the credentials below to connect to AEM. Note: You can check that your message was received and consumed on this website: https://ce-dev-challenge-wk3.cfapps.eu10.hana.ondemand.com/consumed-messages/webapp/index.html.

For example, in my case I will publish the message to the topic dev-challenge/week-3/ajmaradiaga/ce

Bonus:

You can also try sending the CloudEvent message using a protocol other than REST, e.g. AMQP, SMF. There is no need to reinvent the wheel here: check out the code available in the Solace Samples org, e.g. for Node.js, Python, and the tutorials available for the different programming languages. There are plenty of examples there that show you how to use the different protocols.

🚨Credentials 🚨

To communicate with AEM, you will need to authenticate when posting a message. In the section below you can find the credentials required to connect.

For the adventurous out there, I’m also sharing the connection details for the different protocols that we can use to communicate with AEM, e.g. AMQP, Solace Messaging, Solace Web Messaging, in case you want to play around and get familiar with them.


- Connection Type:

  - REST: https://mr-connection-plh11u5eu6a.messaging.solace.cloud:9443

  - AMQP: amqps://mr-connection-plh11u5eu6a.messaging.solace.cloud:5671

  - Solace Messaging: tcps://mr-connection-plh11u5eu6a.messaging.solace.cloud:55443

  - Solace Web Messaging: wss://mr-connection-plh11u5eu6a.messaging.solace.cloud:443

- Username: solace-cloud-client

- Password: mcrtp5mps5q12lfqed5kfndbi2

- Message VPN: eu-fr-devbroker

✅Validation process for this week’s challenge ❌

As part of the validation process for this week’s challenge, there is a consumer service (Monitoring service in the diagram above) that will process the messages sent. The consumer program will validate that the message you post includes an extension context attribute (sapcommunityid) and that its value matches the community ID in the topic path. As for previous weeks, I will kudo a successful response and I will post every day the SAP Community IDs of the messages received and processed successfully.

You can monitor the messages processed by the consumer service on this website: https://ce-dev-challenge-wk3.cfapps.eu10.hana.ondemand.com/consumed-messages/webapp/index.html. If there is an error in the message sent, the monitoring app will tell you what the error is, e.g. not a valid CloudEvent, sapcommunityid extension context attribute missing, or sapcommunityid doesn’t match community Id specified in topic. The consumer service is subscribed to the topics using a wildcard: dev-challenge/week-3/*/ce. This means that it will only process messages sent to topics that fit that pattern.
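If you want to check your message locally before publishing, the Python sketch below approximates the checks described above. It is my guess at the kind of validation involved, not the code of the actual consumer service; the `validate` function is hypothetical, and "valid CloudEvent" is reduced here to the presence of the four required context attributes.

```python
from typing import Optional

REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def validate(topic: str, event: dict) -> Optional[str]:
    """Return an error description, or None if the message passes all checks."""
    levels = topic.split("/")
    # Mirrors the wildcard subscription dev-challenge/week-3/*/ce.
    if len(levels) != 4 or levels[:2] != ["dev-challenge", "week-3"] or levels[3] != "ce":
        return "topic does not match dev-challenge/week-3/*/ce"
    if any(attribute not in event for attribute in REQUIRED_ATTRIBUTES):
        return "not a valid CloudEvent"
    if "sapcommunityid" not in event:
        return "sapcommunityid extension context attribute missing"
    if event["sapcommunityid"] != levels[2]:
        return "sapcommunityid doesn't match community Id specified in topic"
    return None


event = {"specversion": "1.0", "id": "1", "source": "/example",
         "type": "com.example.Test.v1", "sapcommunityid": "ajmaradiaga"}

ok = validate("dev-challenge/week-3/ajmaradiaga/ce", event)
mismatch = validate("dev-challenge/week-3/someone-else/ce", event)
```

In this example `ok` is `None`, while `mismatch` holds the error text for a community ID that differs from the one in the topic.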

Below you can see a screenshot of a message processed successfully by the consumer service.

Example of a payload containing the extension context attribute sapcommunityid:


    {
      "specversion": "1.0",
      "id": "204f70c0-1301-4812-9705-8db7ab57065e",
      "source": "https://tms-prod.ajmaradiaga.com/tickets",
      "type": "com.ajmaradiaga.tms.Ticket.Created.v1",
      "time": "2024-03-17T12:34:03.180643+00:00",
      "data": {
        "id": "IT00010232",
        "description": "Install ColdTurkey to block distracting websites.",
        "urgency": {
          "id": 1,
          "description": "High"
        }
      },
      "sapcommunityid": "ajmaradiaga"
    }